AIDE

Adaptive Multimodal Interfaces to Assist Disabled People in Daily Activities

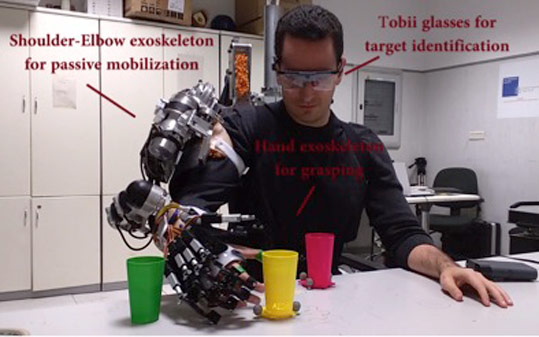

The objective of this project is to develop a modular and adaptive multimodal interface, built on human-machine interface devices, that can be customized to the individual needs of people with disabilities.

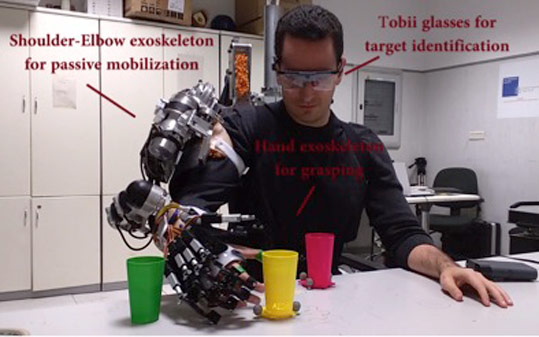

The AIDE project aims to contribute strongly to improving the user-technology interface by developing and testing a novel modular and adaptive multimodal interface that can be customized to the individual needs of people with disabilities. It also focuses on developing a new shared-control paradigm for assistive devices. This paradigm integrates, on the one hand, information from the identification of the user's residual abilities, behaviours, emotional state and intentions and, on the other hand, analysis of the environment and context factors.

The AIDE project addresses three challenges. The first is the development of a novel multimodal interface to detect the behaviours and intentions of the user. The second is the implementation of a shared human-machine control system. The third is the development of a modular multimodal perception system that provides information and support to the multimodal interface and to the human-machine cooperative control.

The system is composed of a brain-machine interface (BMI) control based on EEG brain activity, a wireless surface EMG interface, wearable sensors to monitor physiological signals (such as heart rate, skin conductance level, temperature and respiration rate), a wearable electrooculographic (EOG) system, an eye-tracking module to identify the gaze point, an RGB and depth camera (such as Kinect) to recognize and track the user and objects, and kinetic and dynamic information provided by an upper-limb exoskeleton.

Possible target scenarios for the AIDE system include drinking, eating, pressing a sensitive dual switch, personal hygiene, touching another person, communication, control of home devices and entertainment.

Partners